Retrieving the Procs

I recently tasked myself with setting up DbUp to add database migrations to a .NET project at work. It was straightforward enough to generate the table definitions with DataGrip, but I needed to get my hands dirty to create the stored procedures. To get the lay of the land, I checked out my favorite schema in SQL Server, INFORMATION_SCHEMA.

```sql
SELECT * FROM INFORMATION_SCHEMA.ROUTINES
```

The columns I care about here are ROUTINE_NAME and ROUTINE_TYPE. I want to make sure the latter is always “PROCEDURE”, which it is in my case (yay). ROUTINE_DEFINITION is also worth paying attention to, but it only returns the first 4000 characters of each definition, so I need to query the sys catalog views to make sure I get the full procs. Below is the query that gets me the information I need.

```sql
SELECT 'NEW PROC STARTS HERE', o.name, sm.definition
FROM sys.sql_modules sm
INNER JOIN sys.objects o
    ON sm.object_id = o.object_id AND o.TYPE = 'P'
```

I run this in DataGrip and export the output to a TSV file. The literal NEW PROC STARTS HERE column gives me something to search for later, when I parse the file into a set of SQL scripts that create the procs in my database.

Creating Temporary Proc Files

At this point, it’s worth noting that if I had a SQL GUI that let me select all the procs and download them as a set of scripts, I would totally just do that. So I want to reiterate what I’m trying to accomplish, so I don’t go down a rabbit hole:

- Each proc needs to be its own SQL script named after the proc

- I want to parse this TSV without altering it

- I shouldn’t have to do this again

That last point is worth discussing. It’s true that I won’t have to do this again for this project, since future procs will live in source control and use DbUp for change management. However, if this were worth turning into a generic tool, I’d want to make this parsing code re-runnable. I used to work at a company that would definitely have benefited from such a process, but alas, they never encouraged me to go down this path and clean up their bad practices.

Exploratory Parsing

To kick things off, I looked for my NEW PROC STARTS HERE text to figure out what kind of formats I had to deal with. In short, it looked a bit like this:

```
NEW PROC STARTS HERE SelectSomeStuff "create procedure
```

The only thing I could count on was that the proc itself began right after that first double quote. From there, the proc continued over many lines, because its newlines were part of the exported value. We’d only know we had reached the end when we got to the next NEW PROC STARTS HERE delimiter.
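As an aside, the layout described above (a marker, a name, then a double-quoted field that can span many lines) is what CSV-style quoting looks like with tabs as the separator, so a CSV parser might be able to find the end of each proc from the closing quote instead of waiting for the next marker. The sketch below swaps in Ruby's `csv` library with `col_sep: "\t"`; it is not the approach used in this post, and `parse_procs` is a hypothetical helper name. It assumes the DataGrip export quotes multi-line values and doubles any embedded double quotes (RFC 4180 style). If the export doesn't follow that convention, `CSV` raises `CSV::MalformedCSVError` instead of quietly producing a bad script. It only reads the TSV, so the file stays unaltered.

```ruby
require "csv"

PROC_MARKER = "NEW PROC STARTS HERE"

# Parse the exported TSV text into { proc_name => definition }.
# Assumption: each row is marker <TAB> name <TAB> definition, with
# multi-line definitions wrapped in double quotes and any embedded
# double quotes doubled, which the CSV parser undoes for us.
def parse_procs(tsv_text)
  procs = {}
  CSV.parse(tsv_text, col_sep: "\t") do |row|
    marker, name, definition = row
    # Skip a header row or anything that isn't one of our marker rows
    next unless marker == PROC_MARKER
    procs[name] = definition
  end
  procs
end
```

Each name/definition pair could then be written out with something like `File.write("#{name}.sql", definition)`.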

The Script

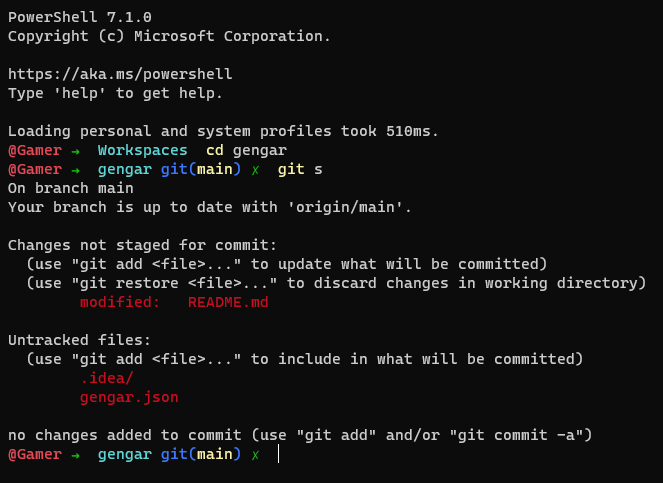

Here’s what I cobbled together using the guidelines above.

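```ruby
# Accumulates one stored procedure's name and body, then writes it to <name>.sql
class ProcFile
  attr_accessor :name, :contents

  def initialize
    @contents = ""
  end

  def write_to_file
    File.write("#{@name}.sql", @contents)
  end
end

PROC_START = "NEW PROC STARTS HERE"
# NEW PROC STARTS HERE\tProcName\t"This Is The Proc
# The name can't contain a tab, so [^\t]+ stops at the first separator.
PROC_HEADER = /^NEW PROC STARTS HERE\t([^\t]+)\t"(.*)$/

new_proc = ProcFile.new

File.foreach("procs.tsv") do |line|
  # Make each script re-runnable (only matches this exact capitalization)
  line.sub!("CREATE PROCEDURE", "CREATE OR ALTER PROCEDURE")

  if line.start_with?(PROC_START)
    # Write out the previous proc before starting the next one
    new_proc.write_to_file unless new_proc.contents.empty?

    match_data = PROC_HEADER.match(line)
    raise "Unexpected header line: #{line}" unless match_data

    new_proc = ProcFile.new
    new_proc.name = match_data[1]
    # (.*)$ stops before the newline, so put it back; otherwise the
    # first and second lines of the proc would be glued together
    new_proc.contents = match_data[2] + "\n"
  else
    # Skip the line holding only the closing quote of the TSV field
    new_proc.contents += line unless line.chomp == '"'
  end
end

# Write the final proc, which has no marker line after it
new_proc.write_to_file unless new_proc.contents.empty?
```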
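Since the script only mostly worked, a quick sanity check after running it could compare the export against the files it produced. This is a hypothetical helper, not part of the original tool: `export_problems` lists procs that have a marker row in the TSV but no matching .sql file, plus any name that appears more than once. Duplicates are possible because the query above selects o.name without the schema, so two procs with the same name in different schemas would be written to the same file, and the second would overwrite the first.

```ruby
# A marker row: the marker, a tab, the proc name, then another tab.
MARKER_LINE = /^NEW PROC STARTS HERE\t([^\t]+)\t/

# Names of every proc announced by a marker row in the exported TSV.
def exported_proc_names(tsv_text)
  tsv_text.scan(MARKER_LINE).flatten
end

# Procs present in the export but missing from written_names, plus any
# name exported more than once (its file would have been overwritten).
def export_problems(tsv_text, written_names)
  names = exported_proc_names(tsv_text)
  {
    missing: names.uniq - written_names,
    duplicated: names.tally.select { |_, count| count > 1 }.keys
  }
end
```

After the script runs, this could be called as `export_problems(File.read("procs.tsv"), Dir.glob("*.sql").map { |f| File.basename(f, ".sql") })`.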

Translating to a DbUp-Friendly Structure

Here’s the CLI tool syntax I wrote to create new database migrations from scratch:

```shell
$ customtool generate migration --name CreateNewTable
```

And this would create something like 20200214200931_CreateNewTable.sql in the Migrations directory, and everyone would rejoice. But unlike table definitions and other types of migrations, I want to treat my procedures more like code, where the whole thing gets re-run any time it changes. Therefore, my tool needs a new syntax.

```shell
$ customtool generate procedure --name SelectSomeStuff
```

This command instead deposits generated procs in the Procedures directory. Order no longer matters, so there’s no timestamp component to the filename. And when I want to run them, I just do:

```shell
$ customtool deploy procedures
```
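The two generate commands differ mainly in where the file goes and how it's named. The helpers below are a hypothetical Ruby sketch of that naming scheme (the actual tool is a .NET CLI whose code isn't shown here); they assume a UTC timestamp in the same 14-digit form as the example filename. The fixed-width timestamp prefix means that sorting migration filenames alphabetically also sorts them chronologically, which matters for runners such as DbUp that order scripts by name by default. Procedure files drop the prefix because each one is a complete CREATE OR ALTER that gets re-run whenever it changes.

```ruby
# Hypothetical: migrations get a sortable UTC timestamp prefix.
def migration_path(name, time = Time.now.utc)
  File.join("Migrations", "#{time.strftime('%Y%m%d%H%M%S')}_#{name}.sql")
end

# Hypothetical: procedures are named after the proc alone, since order
# no longer matters for them.
def procedure_path(name)
  File.join("Procedures", "#{name}.sql")
end
```

For example, `migration_path("CreateNewTable", Time.utc(2020, 2, 14, 20, 9, 31))` yields `Migrations/20200214200931_CreateNewTable.sql`.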

Caveats

It should be obvious, but I want to note it anyway: the script I wrote mostly worked. It didn’t quite work when the SQL wasn’t capitalized or spaced consistently across all the scripts, since the replacement only matches the exact string CREATE PROCEDURE. To test the stored procedures I converted, I ran them locally and assumed that any conversion issues would show up as syntax errors rather than as SQL that is still valid. You’re always better off doing a healthy dose of testing. Even though this is still in progress for me, I plan to run the CREATE OR ALTER scripts on the lower environments so we can verify now that everything works as intended, rather than worrying later that running all the procs introduced some small bug.
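One way to tolerate inconsistent capitalization and spacing would be to swap the exact-string `sub!` for a case-insensitive regular expression and apply it to each proc's whole definition rather than line by line, so a keyword split across lines is still caught. This is a sketch, not what the original script does: `make_rerunnable` is a hypothetical name, and it only rewrites the first match, so a comment such as "-- create procedure for reports" above the real statement would be rewritten instead. T-SQL accepts PROC as a short form of PROCEDURE, so the pattern covers both. Note that CREATE OR ALTER itself requires SQL Server 2016 SP1 or later.

```ruby
# Matches CREATE PROC / CREATE PROCEDURE in any case, with any whitespace
# (including newlines) between the two keywords. "CREATE OR ALTER ..."
# does not match, so already-converted definitions are left alone.
CREATE_PROC = /\bCREATE\s+PROC(?:EDURE)?\b/i

# Hypothetical helper: rewrite the first CREATE PROC[EDURE] in a full
# proc definition to CREATE OR ALTER PROCEDURE.
def make_rerunnable(definition)
  definition.sub(CREATE_PROC, "CREATE OR ALTER PROCEDURE")
end
```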

It’s also worth noting that this is exactly why stored procedures aren’t popular in frameworks like Ruby on Rails: they’re so difficult to test and to manage changes for! I really only encountered them when I entered .NET land, and I was horrified by how often the engineers I worked with thought they were a good idea. Yes, you can get performance gains from them, but you’re almost always better off doing something a bit more testable, just so you can sleep at night.

Addendum: System.CommandLine

I wasn’t going to write much here, except to say that I’m using the new System.CommandLine library to write my CLI tool. It’s still in pre-release, but it already provides more than enough functionality for me to write my tool without the headache of either parsing the input myself (i.e., not using a library at all) or learning a confusing library API, which was my experience with Command Line Parser.